Celebrating 30 years as a Linux user

In this post, I'll share my 30-year journey with Linux and explore how it shaped my future as a developer. Along the way, we'll take a nostalgic stroll down computer hardware memory lane. We'll also touch on the challenges of legacy MS Windows systems in today's public sector. In a follow-up post, we'll look at various useful Linux/macOS commands for GIS and geodata wrangling...

These views and opinions are solely my own and do not represent my employer.

What is Linux?

Linux has been around since the mid-1990s and has since reached a user-base that spans the globe. Linux is actually everywhere: It's in your phones, your thermostats, in your cars, refrigerators, Roku devices, and televisions. It also runs most of the Internet, all of the world's top 500 supercomputers, and the world's stock exchanges. Source: The Linux Foundation

This is expanded on below in the section "Postscript: Linux won the Internet and developer mind-share".

Hello, Tux

Supposedly Linus Torvalds, the inventor of Linux, was inspired by a different penguin illustration, which appears in this Wikipedia article. The Tux mascot later evolved from that and became the official Linux icon and logo shown here. [image attribution: lewing@isc.tamu.edu Larry Ewing and The GIMP, CC0, via Wikimedia Commons]

'94 Linux appears on the scene

The Unix philosophy

Linux is a Unix-like operating system, so the two are very similar. Unix has been around since the '70s. Unix is fundamentally a programming environment that unifies user and development spaces. Its philosophy centers on creating programs that:

- Perform single tasks effectively

- Work together through pipelines

- Process text streams

- Function as filters (input to output)

- Prioritize portability over efficiency
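To make the "filters and pipelines" idea concrete, here is a minimal sketch in Python. Python is my choice for illustration. The script name `pyfilter.py` and the functions `matching`, `numbered`, and `run` are hypothetical and do not come from any real tool. Each function takes a stream of text lines and yields a transformed stream. That lets them compose the same way commands do with `|` in a shell.

```python
"""A tiny Unix-style text filter (hypothetical script name: pyfilter.py).

Reads lines from stdin, keeps those containing PATTERN (case-insensitive),
numbers them, and writes them to stdout, so it can sit in a shell pipeline.
"""
import sys
from typing import Iterable, Iterator, TextIO


def matching(pattern: str, lines: Iterable[str]) -> Iterator[str]:
    """Yield only lines that contain pattern, ignoring case (loosely like `grep -i`)."""
    needle = pattern.lower()
    for line in lines:
        if needle in line.lower():
            yield line


def numbered(lines: Iterable[str]) -> Iterator[str]:
    """Prefix each line with a 1-based number and a tab (loosely like `cat -n`)."""
    for i, line in enumerate(lines, start=1):
        yield f"{i}\t{line}"


def run(pattern: str, stdin: TextIO, stdout: TextIO) -> None:
    """Compose the two filters: a text stream in, a text stream out."""
    for line in numbered(matching(pattern, stdin)):
        stdout.write(line)


# Only act as a command-line filter when given exactly one PATTERN argument.
if __name__ == "__main__" and len(sys.argv) == 2:
    run(sys.argv[1], sys.stdin, sys.stdout)
```

From a shell, `printf 'Linux\nWindows\nlinux kernel\n' | python pyfilter.py linux | wc -l` chains it with a standard tool. The script prints two numbered lines, so `wc -l` reports 2. The script reads and writes plain lines of text instead of a custom format, and that is what lets it plug into other Unix commands.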

The system is designed to be literate and self-documenting through:

- Integrated documentation (man pages, help flags)

- Built-in command inspection tools

- Interactive learning features

- Human-readable source code and configuration

- Transparent system structure

This design creates an environment where using the system and developing for it are seamlessly integrated, making Unix highly extensible and learnable.

Circa '94, just out of college, I got really interested in Linux and wanted to get into programming. I asked my father, "What programming language do you think I should learn?" He had some earlier experience with software projects in the field of ecological and weather modeling. He considered it for a long pause, and then said, "I think you should learn C!"

Even though I never mastered the C language, it was the right guidance. Knowing a modicum of C means you understand compilers, libraries, static and dynamic linking, cache hierarchy, pointers, storage, I/O, etc. With that foundation you can understand any other programming language or operating system.

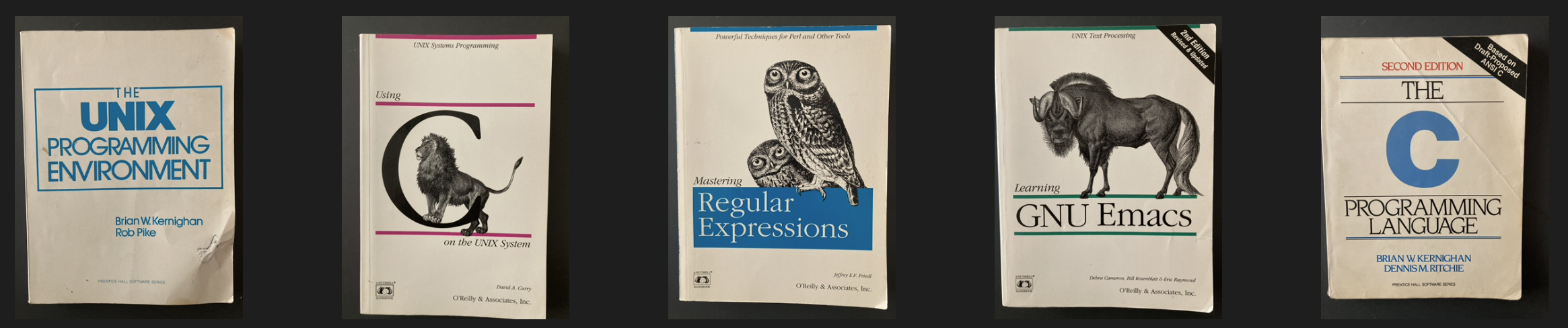

I saved my favorite books from this time period, mostly as a reminder of how much of an impression they made on me. Kernighan later co-wrote "The Go Programming Language", which is one of the best programming books ever.

Linux kernel version 1.x

Micron Millennia desktop PC

Also circa '94, I installed Linux on my home computer, a Micron manufactured in Idaho (it came from the factory with a potato sticker on it). I experimented a lot with building the Linux kernel from source and configuring dual-boot systems. [image attribution: Akbkuku, CC BY 4.0, via Wikimedia Commons]

'95 Reality Construction Company

Circa '95 in Albuquerque, NM, a year out of college, I was really poor and mostly working in restaurants. My friends Kyle, Spiros, Antonio, and I decided to try creating web pages, first for fun and then for profit. We got a few customers and had the idea to start a tech company, which we called Reality Construction Company (RCC). We hosted the pages and web apps at a local Internet service provider (ISP) named Southwest Cyberport (SWCP). We were self-taught developers and designers, and we were not very good at automating and scripting things. Most (all?) good developers are self-taught though!

'96 Southwest Cyberport

SWCP was growing rapidly, because the Internet was just being commercialized and popularized. The owners of SWCP are a nice couple named Jamey and Mark Costlow. They offered me a technical support job for their Internet access, web hosting, email and FTP services business.

SunOS and Solaris

[image attribution: jonmasters, CC BY-NC-SA 2.0]

As a bonus, I got to learn about Unix, because the Costlows were also very technical and served as the system administrators. They were running SunOS and Solaris Unix. I guess I was kind of an apprentice sysadmin. At one point early on, a folding table held about a dozen of these Sun SPARC machines, aka "pizza boxes". The table was sagging more than a bit from the weight.

I learned about the X-Windows display system, and we used early versions of Python, Tcl, PHP, Perl, Java, JavaScript, MySQL, and Postgres. Customers had access to many of these tools, which let them build dynamic web pages using CGI and HTML. The web servers were multi-user systems, so learning Unix was mandatory for working with any kind of database or interactive web app.

Tech-Kids

Mark and Jamey were part of a group that someone named "Tech-Kids". I was de facto part of it because I worked at SWCP, but I was nowhere near as smart and cool as most of those folks. They had their own Internet Relay Chat (IRC) channel, and I remember going to a house party or two.

Over the next several years, many of the group moved to Silicon Valley, and I'm sure they were super successful in the tech industry there. I stayed in New Mexico, as I was already a California escapee.

Linux saves the day

I think it was Brendan Conoboy who first introduced Linux into the SWCP system. He quickly deployed it as a software firewall to plug some security holes and put the Sun machines behind a NAT. Before long, all the SunOS and Solaris "pizza boxes" had been migrated to Linux servers. Brendan went on to maintain the GCC compiler, and then moved on to a long career at Red Hat.

'97 Linux kernel version 2.x

In those days, symmetric multiprocessing (SMP) support was added, and the range of supported hardware architectures was broadened. I tried it out on some relatively ancient hardware. Each machine was a puzzle: figuring out how to load and run the OS and various packages.

Sun SPARCStation SLC

I installed Linux on this little terminal. These were available for free or cheap. It had a monochrome monitor, which was pleasing to the eyes. I got X-Windows running and could browse the web using Netscape Navigator, in grayscale. So cool. It was quiet too, because it was fanless. But so slow. [image attribution: David Rosenthal CC BY-SA 3.0 US]

DEC Alpha

I also installed Linux on this Digital Equipment Corp (DEC) workstation. Also available free or cheap. [image attribution: The Centre for Computing History]

macOS X, BSD Unix for the masses

macOS 9 and older had a reputation for instability (crashing and showing the "bomb" screen) because of its system architecture.

Circa 2001, macOS X changed all that by introducing preemptive multitasking, protected memory, and a BSD Unix-based subsystem. Finally, there was an OS with a nice graphical interface and an actual Unix environment with all the tools and compilers one needs.

The last time I went to a developer conference, there was literally a sea of macOS laptops. Apple really has gained traction with developers as well as creative designers. Of course, there is always a cross-section of hardcore Linux folks running Lenovo ThinkPads or System76 laptops. We'll dig into developer mind-share more below.

Linux at National Center for Genome Resources (NCGR)

Working at NCGR was my first exposure to an on-premises data center with a lot of compute and storage. I learned how to parallelize jobs and submit batches to a cluster of bare-metal Linux servers, using lots of Bash shell scripting, Makefiles, Perl, and Python. A single genome sequencing instrument can output terabytes of data per day, so the compute and storage requirements were substantial.

Mike Warren's Linux Top500 "stunt" at Descartes Labs

I worked at Descartes Labs when Mike Warren, the CTO, used a cluster of cloud VMs to compete for a spot on the Top500 list of supercomputers. It was one of the first times a team placed on that list using public cloud resources. "Thunder from the Cloud: 40,000 Cores Running in Concert on AWS" is an inspiring story about the democratization of computing.

Taking stock: Windows at USDA

My current role is as a contractor to the U.S. Department of Agriculture (USDA), Farm Production and Conservation Business Center (FPAC) and Natural Resources Conservation Service (NRCS). I was hired to work on a re-architecture and AWS cloud migration of a legacy application. I was actually very surprised to find that basically the entire system is MS Windows. The status quo for desktop computers is, unsurprisingly, Windows. But the servers, databases, and Extract-Transform-Load (ETL) pipelines are also Windows-based. Visual Studio Pro, SQL Server Integration Services (SSIS), SQL Server Management Studio (SSMS), and Remote Desktop are required. Chasing down the required software installations, licenses, and elevated privileges for Windows authentication literally takes weeks. Then one is hobbled by GUI-only applications and a severely lacking command-line experience.

Linux/macOS has indisputably won on Internet servers and in web development, scientific computing, and High Performance Computing (HPC). So why does it feel like I've teleported 30 years back in time into a giant corporation? Incredible. How is this even possible? Because that's more or less exactly what it is. I was able to roughly piece together how we arrived at this state:

FPAC (Farm Production and Conservation) is a mission area within USDA that includes NRCS (Natural Resources Conservation Service) as one of its agencies, alongside FSA (Farm Service Agency) and RMA (Risk Management Agency). FPAC's entrenched Windows infrastructure largely stems from 1990s modernization efforts. Key factors:

- FSA's legacy AS/400 systems were migrated to Windows-based solutions

- Large contractors (particularly IBM) implemented Service Center Information Management System (SCIMS) using Microsoft technologies

- County offices standardized on Windows desktops for program delivery

- Custom applications were built on .NET/SQL Server stack

- GIS tools like Toolkit were initially developed for Windows platforms

This created a Microsoft-centric ecosystem that persists today, despite NRCS maintaining some Unix/Linux systems for scientific computing and conservation planning tools. [Source: Claude 3.5 Sonnet]

For the modernization of this particular app, I am advocating a development approach based on Docker containers (Linux), Postgres, and AWS deployment.

Postscript

Linux won the Internet and developer mind-share

Numbers reflect 2024 estimates across enterprise and public infrastructure. Source: Claude.ai

Percent of Internet infrastructure

| System Category | Linux/Unix Usage | Notes |
|---|---|---|
| Container Orchestration | ~98% | Kubernetes, Docker dominance |
| Web Servers | ~90% | Apache, Nginx, BSD variants |
| Network Routers | ~95% | Enterprise and carrier-grade |
| Network Switches | ~85% | BSD and Linux variants |
| IoT Devices | ~85% | Android, embedded Linux, BSD |

Developer mind-share

| Developer Category | Linux/Unix/macOS Usage | Key Drivers |
|---|---|---|
| Cloud/DevOps | ~90% | Container ecosystems, server management |
| Embedded/IoT | ~85% | Linux development requirements |
| Security/Pentesting | ~80% | Unix tooling, Kali Linux |
| Data Science | ~75% | Unix tools, Python ecosystem |
| Web Development | ~70% | macOS popularity, cross-platform tools |
| Mobile Dev | ~65% | macOS for iOS, Linux for Android |
| Game Development | ~45% | Windows still dominant |
| Enterprise/.NET | ~35% | Windows prevalence |

macOS is included in the table because it has a BSD Unix subsystem and command-line experience, as explored in the section "macOS X, BSD Unix for the masses".

Next steps

I am currently working toward the AWS Certified Solutions Architect (AWS CSA-A) certification and plan to pursue the Data Engineering track after that.

🌎 Thanks for rambling! 👋🏼

- 2025-05-02 Clarify some comments about macOS < X